Today, when we want to compare the performance of different CDN providers in a specific region, the first reflex is to check public Real User Monitoring (RUM) data, with Cedexis being one of the best-known RUM providers. RUM data is very useful, and many CDN providers buy it to benchmark themselves against competitors and to keep improving their performance.

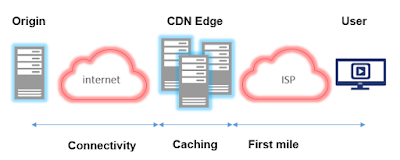

Below, I will highlight what exactly Community RUM measures, so that you do not jump to the wrong conclusions. Let's focus on the latency KPI and list the components that contribute to it:

- Last mile latency to the CDN Edge, which reflects how near the Edge is to the user from a network perspective.

- Cache width latency, which arises mainly when the CDN Edge does not have the content locally and must fetch it from somewhere else (a Peer Edge, a Parent Cache, or simply the origin).

- Connectivity latency from the CDN to the origin when a cache fill is needed.

In general, Community RUM measurements are based on the time (round-trip delay, RTD) it takes to serve users a predefined object from CDN Edges. Since the object is always the same and never changes, it is always cached on the Edges. As a consequence, Community RUM measures only last mile network latency, which adequately reflects the latency of very popular objects that are already in cache.
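To make this concrete, here is a minimal sketch of the three latency components listed above, treated as simple additive terms. The function name and every millisecond figure are illustrative assumptions, not measurements from any CDN or RUM provider.

```python
def request_latency_ms(last_mile_ms, miss_penalty_ms, origin_fill_ms,
                       edge_hit, parent_hit):
    """Toy model: latency of one request as a sum of the three components."""
    latency = last_mile_ms              # user <-> Edge, always paid
    if not edge_hit:
        latency += miss_penalty_ms      # Edge must ask a peer or parent cache
        if not parent_hit:
            latency += origin_fill_ms   # cache fill all the way from the origin
    return latency


# Hypothetical figures, chosen only for illustration.
LAST_MILE, MISS_PENALTY, ORIGIN_FILL = 20, 40, 120

# A RUM test object never changes, so it is always an Edge hit.
rum_probe = request_latency_ms(LAST_MILE, MISS_PENALTY, ORIGIN_FILL,
                               edge_hit=True, parent_hit=True)

# A rarely requested object may miss at every cache level.
long_tail = request_latency_ms(LAST_MILE, MISS_PENALTY, ORIGIN_FILL,
                               edge_hit=False, parent_hit=False)

print(f"RUM probe: {rum_probe} ms, long-tail object: {long_tail} ms")
```

In this simplified model the RUM probe only ever sees the last mile term, whatever the values of the other two.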

Nevertheless, that's only part of the picture. In real life, CDNs have different capabilities and strategies for storing content beyond the Edges and filling it from the origin:

- Depending on content popularity, the CDN's cache purge policy, the disk space available on the Edge (Cache Width), and the Parent Caching architecture, a request will be a cache hit or a cache miss, which affects performance. VoD providers with large video libraries know this topic very well.

- Depending on the CDN's upstream connectivity, the number of hops needed to fill from the origin affects connectivity latency. CDNs that have built their own backbone benefit from good upstream connectivity. Dynamic content is very sensitive to this aspect.
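
As a rough illustration of why these two points matter, the sketch below compares two hypothetical CDNs. `cdn_a` and `cdn_b`, their hit ratios, and their latency figures are invented for the example. It assumes a simple expected-value model in which every cache miss pays a single fill cost.

```python
def expected_latency_ms(last_mile_ms, fill_ms, miss_ratio):
    """Average latency when a fraction `miss_ratio` of requests needs a fill."""
    return last_mile_ms + miss_ratio * fill_ms


# Hypothetical CDNs: cdn_a sits closer to users, while cdn_b has wider
# caches (fewer misses) and better upstream connectivity (cheaper fills).
cdns = {
    "cdn_a": {"last_mile_ms": 15.0, "fill_ms": 200.0, "miss_ratio": 0.20},
    "cdn_b": {"last_mile_ms": 25.0, "fill_ms": 80.0, "miss_ratio": 0.05},
}

# What an always-cached test object reveals: the last mile only.
rum_view = {name: c["last_mile_ms"] for name, c in cdns.items()}

# What a content library with cache misses experiences on average.
real_view = {name: expected_latency_ms(**c) for name, c in cdns.items()}

print("Best on the RUM test object:", min(rum_view, key=rum_view.get))
print("Best on real traffic:", min(real_view, key=real_view.get))
```

With these made-up numbers the ranking flips: the CDN that looks best on the always-cached test object is not the one that serves the full library fastest.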

As a final word, we also need to be aware that CDNs tend to optimize the configuration used for RUM measurements specifically for this use case.
