How can I protect my OTT platform from attacks? How can I stop bad actors from stealing my content and monetizing it instead of me? Every OTT provider should be asking these questions about video streaming security. In this post, I share some answers from a delivery (CDN) perspective, building on my customers' experiences. I look at video streaming security from two angles: protecting the OTT platform and protecting the content.

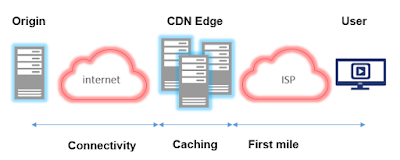

Typically, the OTT platform (origin) sits behind a CDN. As a result, bad actors can either attack the origin through the CDN or bypass the CDN entirely and attack the origin directly. I suggest the following solutions and best practices to strengthen your platform security:

- Isolate the origin on infrastructure separate from your other services, such as your email server.

- Avoid an easy-to-guess FQDN for the origin (such as my-origin.ottdomain.tv) and do not expose it publicly; it is better to use a completely different domain name.

- On your firewall (ideally a cloud-based one), allowlist only the CDN's IP ranges. Most CDNs can guarantee that origin fill requests come from a predefined IP range.

- Use a CDN parent cache (a mid-tier cache layer between the edge servers and the origin) to reduce traffic back to the origin. Without it, when an asset has a widely distributed audience, every CDN edge server may go back to the origin to fill its cache, which can bring the origin down much like a DDoS attack!

- Use CDN-based geoblocking to block traffic from countries where you have no audience. For example, if you do not operate in Latin America, you had better block that region, because it has a considerable concentration of DDoS botnets.

- Understand how your CDN mitigates DDoS attacks at layers 3, 4, and 7.

- Implement request restrictions on the CDN: block HTTP POST requests if you do not use them, and ignore query strings that are not part of your normal usage.
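To make the platform-side rules above more concrete, here is a minimal Python sketch of the decisions they encode: an origin-side check that accepts only CDN fill ranges, and an edge-side check that applies geoblocking, refuses unused methods such as POST, and strips unexpected query parameters. All names, IP ranges, country codes and parameter names are hypothetical placeholders. In a real deployment these rules live in your firewall and in your CDN's own rule configuration, not in application code.

```python
import ipaddress
from typing import Optional, Tuple
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical origin-fill ranges (RFC 5737 documentation prefixes).
# Replace with the ranges your CDN actually uses for origin fill.
CDN_FILL_RANGES = [ipaddress.ip_network(n) for n in ("192.0.2.0/24", "198.51.100.0/24")]

# Placeholder country codes for regions where you have no audience.
BLOCKED_COUNTRIES = {"XA", "XB"}

# Methods a player typically needs; POST is refused because it is not used.
ALLOWED_METHODS = {"GET", "HEAD", "OPTIONS"}

# Query parameters your players legitimately send (hypothetical names).
ALLOWED_QUERY_PARAMS = {"token", "expires"}


def origin_accepts(peer_ip: str) -> bool:
    """Origin firewall rule: accept connections only from CDN fill ranges."""
    addr = ipaddress.ip_address(peer_ip)
    # An IPv6 address is never "in" an IPv4 network, so it is rejected here.
    return any(addr in net for net in CDN_FILL_RANGES)


def edge_decision(method: str, country: str, url: str) -> Tuple[int, Optional[str]]:
    """Model of CDN edge rules. Returns (HTTP status, normalized URL or None)."""
    if country.upper() in BLOCKED_COUNTRIES:
        return 403, None  # geoblocked
    if method.upper() not in ALLOWED_METHODS:
        return 405, None  # e.g. POST when the platform never uses it
    parts = urlsplit(url)
    # Keep only expected query parameters so random ones cannot vary the
    # cache key and force extra origin fills.
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if k in ALLOWED_QUERY_PARAMS]
    normalized = urlunsplit((parts.scheme, parts.netloc, parts.path,
                             urlencode(kept), parts.fragment))
    return 200, normalized
```

Stripping unknown query parameters matters when the query string is part of the CDN cache key: otherwise an attacker can append random parameters so that every request misses the cache and triggers an origin fill.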

I have seen many cases where customers suffered from their content appearing on third-party websites, and thus lost potential revenue. The following solutions can help protect against content theft:

- Apply simple HTTP best practices on the CDN, such as enforcing a cross-origin (CORS) policy and blocking requests based on the Referer header.

- Authenticate streams on the CDN using either cookie-based token authentication (pay attention to cookie support across devices and to the applicable legal framework) or path-based token authentication.

- Add DRM protection to your video workflow. This is the ultimate solution, but it comes with cost and complexity drawbacks, since the DRM industry is still very fragmented and not standardized. Make sure your CDN is compatible with the chosen DRM technology (for example, Widevine’s WVM format requires that the CDN support byte-range requests).

- Use TLS for video delivery to reduce the risk of a third party sniffing your content over unencrypted channels. Make sure your CDN is up to date with the latest TLS security and performance best practices (secure cipher suites, OCSP stapling, keep-alive, TLS False Start, etc.).

- Contact the CDN and IP/hosting providers of websites that are stealing your content, so that they block the illegal content and dissuade the site operators from continuing the theft.
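The path-based token authentication and Referer checks above can be sketched as follows. This is a generic HMAC-SHA256 scheme assumed for illustration, not the token format of any particular CDN: the `/auth/<expires>/<signature>` path layout, the secret, and the allowed hosts are all hypothetical. Each CDN documents its own token format, and that is what you would actually configure.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import urlsplit

# Hypothetical secret shared by your token service and the CDN edge.
SECRET = b"replace-with-a-long-random-secret"

# Hypothetical hosts allowed to embed your player.
ALLOWED_REFERER_HOSTS = {"ottdomain.tv", "www.ottdomain.tv"}


def _sign(path: str, expires: int) -> str:
    """HMAC-SHA256 over the protected path and its expiry time."""
    msg = f"{path}:{expires}".encode()
    return hmac.new(SECRET, msg, hashlib.sha256).hexdigest()


def make_tokenized_path(path: str, ttl_s: int, now: Optional[int] = None) -> str:
    """Build /auth/<expires>/<signature><path> for a path starting with '/'."""
    now = int(time.time()) if now is None else now
    expires = now + ttl_s
    return f"/auth/{expires}/{_sign(path, expires)}{path}"


def validate_tokenized_path(tokenized: str, now: Optional[int] = None) -> Optional[str]:
    """Return the protected path if the token is valid and unexpired, else None."""
    now = int(time.time()) if now is None else now
    parts = tokenized.split("/", 4)  # ['', 'auth', expires, signature, rest]
    if len(parts) != 5 or parts[0] != "" or parts[1] != "auth":
        return None
    expires_s, signature, path = parts[2], parts[3], "/" + parts[4]
    if not expires_s.isdecimal() or int(expires_s) < now:
        return None  # malformed or expired
    expected = _sign(path, int(expires_s))
    # Constant-time comparison avoids leaking the signature through timing.
    if not hmac.compare_digest(signature.encode(), expected.encode()):
        return None
    return path


def referer_allowed(referer: Optional[str]) -> bool:
    """Reject requests whose Referer names a host outside the allowlist.

    A missing Referer is accepted because many apps and privacy settings omit it.
    """
    if not referer:
        return True
    return urlsplit(referer).hostname in ALLOWED_REFERER_HOSTS
```

The Referer check is only a deterrent, since the header is easy to forge outside a browser; the signed, expiring path is what actually limits who can fetch a stream. Real CDN schemes often sign a directory or ACL prefix so that one token covers every segment of a stream; this sketch signs a single exact path to keep it short.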

One last piece of advice, common to both platform and content security: monitor your CDN logs and build relevant security-oriented analytics on top of them, so that you gain better insight into your streaming and can act quickly to mitigate any abnormal behavior.
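As a starting point for security analytics on CDN logs, the sketch below counts requests per country and per client IP, and flags IPs with unusually high volume or a high share of 403 responses (for example, repeated failed token checks or geoblocked requests). The log format, thresholds and function names are assumptions; real CDN log formats differ, and thresholds should come from your own traffic baseline.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, List

# Assumed, simplified log format, one request per line, space-separated:
#   <client_ip> <country> <status> <bytes> <path>
# Real CDN log formats differ; adapt parse_line() to yours.


@dataclass
class LogEntry:
    ip: str
    country: str
    status: int
    nbytes: int
    path: str


def parse_line(line: str) -> LogEntry:
    ip, country, status, nbytes, path = line.split()
    return LogEntry(ip, country, int(status), int(nbytes), path)


def requests_by_country(lines: Iterable[str]) -> Counter:
    """Request counts per country, to spot traffic from unexpected regions."""
    return Counter(parse_line(line).country for line in lines if line.strip())


def flag_suspicious(lines: Iterable[str], max_requests: int = 1000,
                    max_denied_ratio: float = 0.5) -> List[str]:
    """Client IPs with abnormal request volume or share of 403 responses."""
    total: Counter = Counter()
    denied: Counter = Counter()
    for line in lines:
        if not line.strip():
            continue  # skip blank lines
        entry = parse_line(line)
        total[entry.ip] += 1
        if entry.status == 403:  # e.g. failed token check or geoblock
            denied[entry.ip] += 1
    return sorted(ip for ip, n in total.items()
                  if n > max_requests or denied[ip] / n > max_denied_ratio)
```

A sudden share of traffic from a region you geoblock, or a single IP hammering the CDN with failed token checks, is the kind of signal worth alerting on before it turns into an outage or a leak.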